Congratulations to Daeho and Seunghui

[Title]

Camera-LiDAR Extrinsic Calibration using Constrained Optimization with Circle Placement

[Journal]

IEEE Robotics and Automation Letters (RA-L), Vol. 10, Issue 2, pp. 883-890, 2025.

[Authors]

Daeho Kim, Seunghui Shin, and Hyoseok Hwang*

[Motivation]

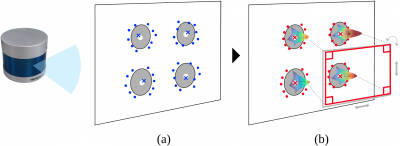

In target-based camera-LiDAR extrinsic calibration, accurate feature extraction is crucial for achieving high performance. However, because a LiDAR point cloud is a discrete sampling of the scene, features extracted directly from it can be inaccurate. This research addresses the issue by generating a probability distribution from the LiDAR point cloud and applying Lagrange multipliers, with the known circle placement of the target as a constraint, to extract more accurate features. This approach is expected to enhance calibration performance.
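For readers unfamiliar with the technique, the general shape of a Lagrange-multiplier formulation can be sketched as follows. This is a generic illustration and not the paper's actual objective: the symbols $\mathbf{c}_i$ (circle centers), $f$ (a fitting cost derived from the point-cloud distribution), $d_{ij}$ (known center-to-center distances on the target), and $\lambda_{ij}$ (multipliers) are all assumptions introduced here.

$$
\min_{\mathbf{c}_1,\dots,\mathbf{c}_n} f(\mathbf{c}_1,\dots,\mathbf{c}_n)
\quad \text{subject to} \quad
g_{ij}(\mathbf{c}) = \lVert \mathbf{c}_i - \mathbf{c}_j \rVert^2 - d_{ij}^2 = 0
$$

$$
\mathcal{L}(\mathbf{c}, \lambda) = f(\mathbf{c}) + \sum_{i<j} \lambda_{ij}\, g_{ij}(\mathbf{c}),
\qquad
\nabla_{\mathbf{c}} \mathcal{L} = 0, \quad g_{ij}(\mathbf{c}) = 0
$$

Solving the stationarity and constraint equations together yields centers that fit the data while respecting the known target geometry.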

[Key Figures]

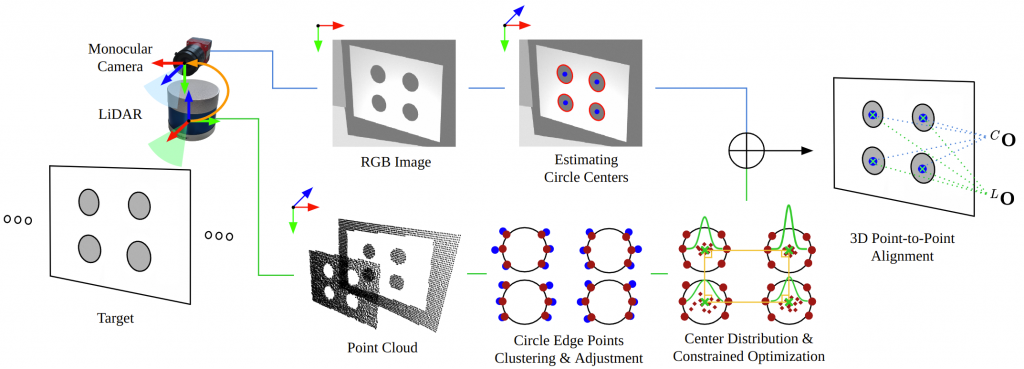

Overview of the monocular camera-LiDAR extrinsic calibration framework. A target is positioned within the FOV of the sensors and captured from multiple perspectives. The scenario shown is an example with a single image and a single LiDAR scan. After correspondences are identified, the LiDAR-to-camera extrinsic parameters are determined through 3D point-to-point alignment.
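The 3D point-to-point alignment mentioned in the caption is commonly solved in closed form with an SVD (the Kabsch method). Below is a minimal sketch of that standard technique, assuming matched 3D feature points are already available in the LiDAR and camera frames. The function name `rigid_align` is hypothetical, and this is not necessarily the solver used in the paper.

```python
import numpy as np

def rigid_align(src, dst):
    """Estimate R (3x3) and t (3,) minimizing sum ||R @ src_i + t - dst_i||^2.

    src, dst: (N, 3) arrays of corresponding 3D points, N >= 3, not collinear.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)

    # Center both point sets on their centroids.
    src_mean = src.mean(axis=0)
    dst_mean = dst.mean(axis=0)

    # 3x3 cross-covariance between the centered sets.
    H = (src - src_mean).T @ (dst - dst_mean)
    U, _, Vt = np.linalg.svd(H)

    # Flip the last axis if needed so R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    t = dst_mean - R @ src_mean
    return R, t
```

With `src` as the features in the LiDAR frame and `dst` as the same features in the camera frame, the returned `R` and `t` map LiDAR coordinates into camera coordinates. Pooling correspondences from several target poses generally makes the estimate better conditioned.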

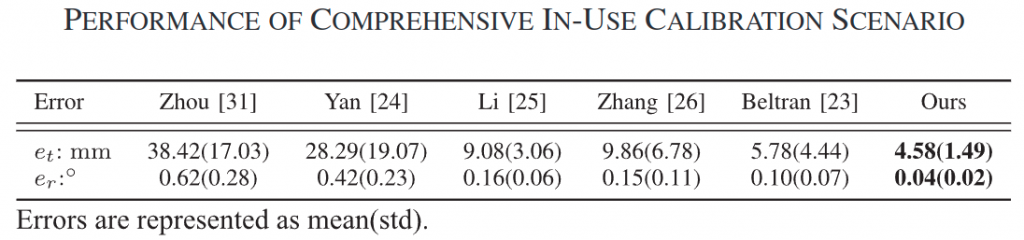

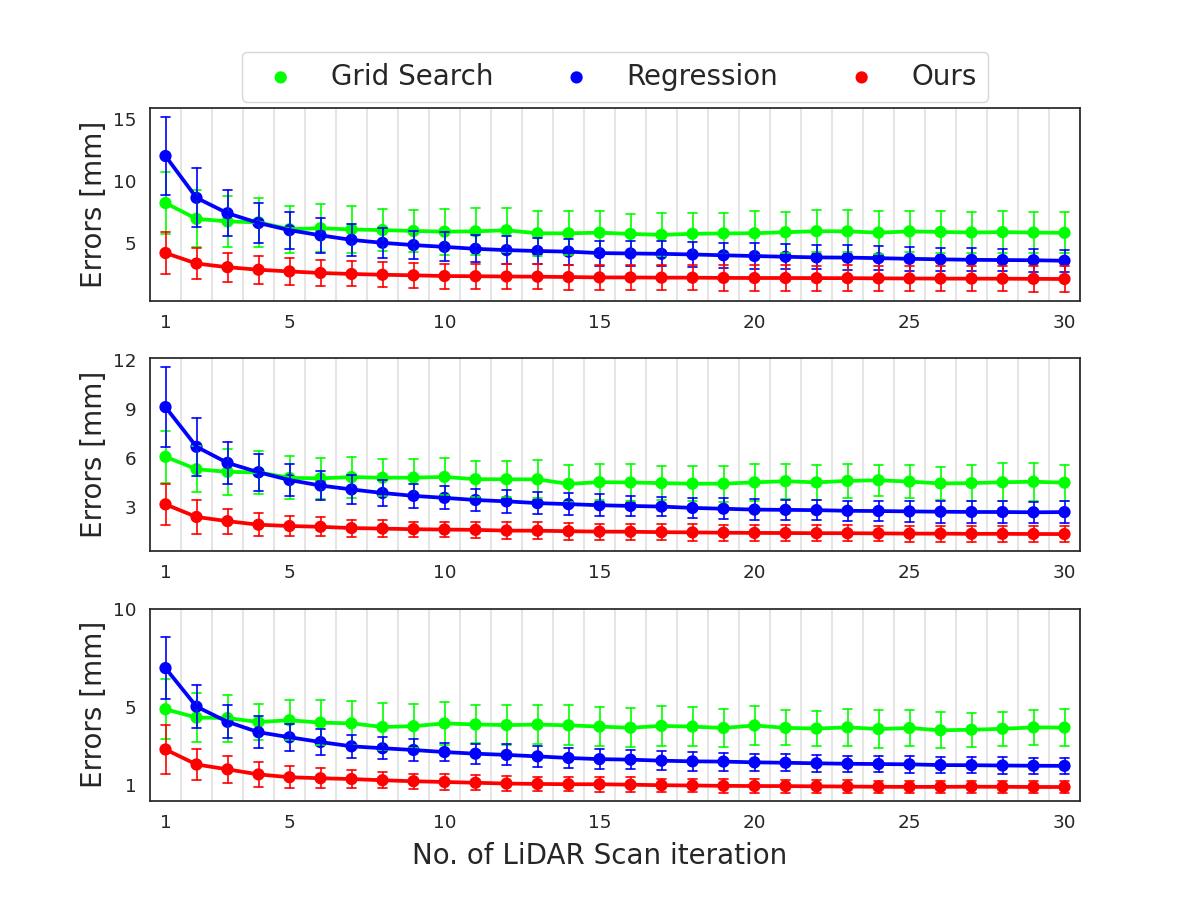

[Key Results]